Face Tracking

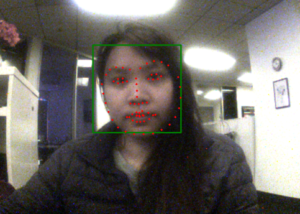

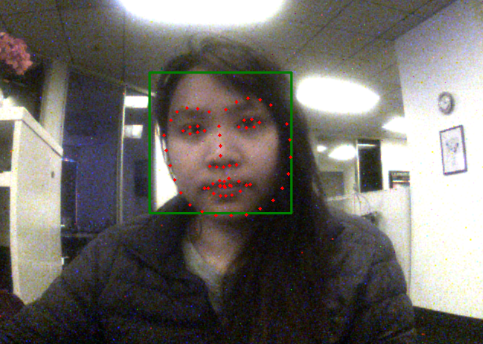

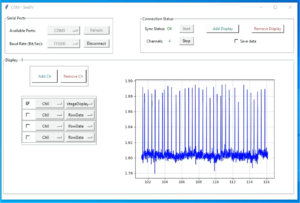

Face tracking in this algorithm combines OpenCV’s Haar cascade classifier for initial face detection with Dlib-based landmark detection for detailed facial features. The function first converts the input grayscale image to 8-bit depth, because the cascade detector expects 8-bit input. The 8-bit image is then passed to OpenCV’s face detection model, which returns bounding boxes around faces based on pre-trained features. Each detected face is then processed by Dlib’s shape predictor, which maps landmark points onto facial regions such as the eyes, nose, and lips.
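The 8-bit conversion step can be illustrated with a small, library-free sketch. It assumes the input is a grayscale frame with a higher bit depth (for example 12-bit sensor data) and rescales it linearly to the 0..255 range. The function name `to_8bit` and the min-max scaling choice are assumptions made for illustration; the original implementation may use a different mapping, and with OpenCV available a call such as `cv2.normalize` with `NORM_MINMAX` would normally do this work.

```python
def to_8bit(gray):
    """Linearly rescale a 2-D grayscale image (list of rows) to 0..255.

    Hypothetical helper: min-max scaling is an assumption, not
    necessarily the mapping used by the original code.
    """
    flat = [p for row in gray for p in row]
    if not flat:
        # Empty image: nothing to rescale.
        return [list(row) for row in gray]
    lo, hi = min(flat), max(flat)
    if hi == lo:
        # Constant image: avoid division by zero, map every pixel to 0.
        return [[0 for _ in row] for row in gray]
    scale = 255.0 / (hi - lo)
    return [[int(round((p - lo) * scale)) for p in row] for row in gray]


# Example: a tiny 12-bit frame (values in 0..4095).
frame16 = [[0, 1024, 2048],
           [4095, 3000, 512]]
frame8 = to_8bit(frame16)
```

After this step the darkest pixel maps to 0 and the brightest to 255, so the result fits the 8-bit input that the cascade detector expects.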

Once faces are detected, the algorithm updates the tracked face list so that each face is followed from frame to frame. If a face region is too large, it is resized to a fixed 150×150 resolution before being passed to Dlib, which limits memory use. The system then draws bounding boxes around the detected faces and overlays the landmark points to visualize key facial features. Finally, the processed frame is converted into a WriteableBitmap and displayed in the UI through the Dispatcher, giving real-time face detection and tracking. This approach suits biometric authentication, facial expression analysis, emotion detection, and AR/VR applications, where detailed face tracking is crucial.
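Two of the steps above can be sketched without any imaging library: resizing an oversized face region to 150×150 (and mapping landmarks back to frame coordinates), and updating the tracked face list. The text does not say how tracked faces are matched across frames, so the intersection-over-union (IoU) matching below is an assumption, as are the names `crop_scale`, `landmarks_to_frame`, `iou`, `update_tracks`, and the 0.3 threshold. Boxes are `(x, y, width, height)` tuples.

```python
MAX_SIDE = 150  # fixed resolution named in the text


def crop_scale(box, max_side=MAX_SIDE):
    """Return (sx, sy) scale factors for resizing a face crop.

    Crops that already fit are left alone; larger ones are mapped
    to max_side x max_side.
    """
    _, _, w, h = box
    if w <= max_side and h <= max_side:
        return 1.0, 1.0
    return max_side / w, max_side / h


def landmarks_to_frame(points, box, scale):
    """Map landmarks found in the resized crop back to frame coordinates."""
    x, y, _, _ = box
    sx, sy = scale
    return [(x + px / sx, y + py / sy) for px, py in points]


def iou(a, b):
    """Intersection over union of two (x, y, w, h) boxes."""
    ax, ay, aw, ah = a
    bx, by, bw, bh = b
    ix = max(0, min(ax + aw, bx + bw) - max(ax, bx))
    iy = max(0, min(ay + ah, by + bh) - max(ay, by))
    inter = ix * iy
    union = aw * ah + bw * bh - inter
    return inter / union if union else 0.0


def update_tracks(tracks, detections, threshold=0.3):
    """Assumed matching rule: greedy best-IoU assignment.

    tracks maps track id -> box. A detection that overlaps an existing
    track keeps that id; otherwise it gets a new id. Tracks with no
    matching detection are dropped.
    """
    updated = {}
    free = dict(tracks)
    next_id = max(tracks, default=-1) + 1
    for det in detections:
        best = max(free, key=lambda t: iou(free[t], det), default=None)
        if best is not None and iou(free[best], det) >= threshold:
            updated[best] = det
            del free[best]
        else:
            updated[next_id] = det
            next_id += 1
    return updated


# Example: a 300x300 face is halved in each dimension before landmarking.
box = (100, 50, 300, 300)
scale = crop_scale(box)
frame_points = landmarks_to_frame([(75, 75)], box, scale)

# Example: the first detection continues track 0, the second starts track 1.
tracks = update_tracks({0: box}, [(110, 55, 300, 300), (600, 400, 80, 80)])
```

Keeping the scale factors alongside the crop matters because landmarks are found in the resized crop's coordinates; without mapping them back, the overlay would be drawn in the wrong place on the full frame.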