Depth Anything and MiDaS are both powerful monocular depth estimation models, but they serve different needs. Depth Anything can be used for absolute (metric) depth estimation through its metric-depth fine-tuned models. This makes it suitable for real-world applications such as robotics, 3D reconstruction, AR/VR, and autonomous navigation. It builds on a transformer-based architecture trained on large-scale datasets, which gives it high accuracy and strong generalization across diverse scenes. However, its larger variants need a high-end GPU to run at their best.

MiDaS, on the other hand, focuses on relative depth estimation and excels at image-based depth perception, SLAM, and artistic applications. It offers lighter models that run on mid-range GPUs or even CPUs. This makes it a more accessible choice when absolute depth values are not required. Depth Anything provides better precision for real-world depth measurement, whereas MiDaS is faster and more efficient for general depth perception tasks. The choice therefore comes down to whether absolute depth accuracy or computational efficiency matters more for the application.
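The following example makes "relative" depth concrete. MiDaS-style predictions are commonly treated as correct only up to an unknown scale and shift, in inverse-depth space. Under that assumption, the scale `s` and shift `t` can be recovered by least squares from a few pixels that have known reference values. This is a minimal sketch only. The function name `align_scale_shift` and the synthetic data are hypothetical and are not part of either model's API. The example shows why a relative map alone cannot yield meters without some external reference.

```python
import numpy as np


def align_scale_shift(relative: np.ndarray, reference: np.ndarray, mask: np.ndarray):
    """Hypothetical helper: least-squares fit of s, t so that s * relative + t ~= reference
    on the pixels selected by mask; returns the aligned map and the fitted s, t."""
    x = relative[mask].astype(np.float64)
    y = reference[mask].astype(np.float64)
    A = np.stack([x, np.ones_like(x)], axis=1)  # design matrix with columns [x, 1]
    (s, t), *_ = np.linalg.lstsq(A, y, rcond=None)
    return s * relative + t, float(s), float(t)


# Synthetic example (assumption): the "relative" map equals the true inverse
# depth up to an affine transform, namely true = 3 * relative + 0.1.
rng = np.random.default_rng(0)
true_inv_depth = rng.uniform(0.2, 1.0, size=(4, 4))
relative = (true_inv_depth - 0.1) / 3.0
mask = np.zeros((4, 4), dtype=bool)
mask[0, :] = True  # pretend only the first row has reference measurements
aligned, s, t = align_scale_shift(relative, true_inv_depth, mask)
```

With four reference pixels, the fit recovers `s` close to 3 and `t` close to 0.1. The aligned map then matches the true values everywhere, not just on the masked row. Without such references, only the ordering and relative spacing of depths are meaningful.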

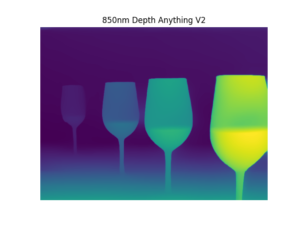

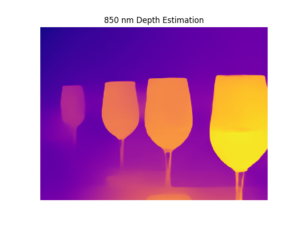

In this algorithm, depth is estimated with the Depth Anything model, which takes a 16-bit grayscale image and maps it to a real-world depth representation. The function first converts the raw 16-bit data to 8-bit grayscale so that pixel values fall within the range the model expects. The 8-bit image is then resized to 518×518, the input size required by the ONNX-based Depth Anything v2 model (518 = 37 × 14, a multiple of the ViT patch size of 14). Before inference, the grayscale values fill all three RGB channels, and each channel is normalized with the ImageNet mean and standard deviation. The resulting tensor passes through an ONNX inference session, which produces a depth map in which each pixel holds an estimated distance value.
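The preprocessing and inference steps above can be sketched in Python with NumPy and ONNX Runtime. This is a sketch under stated assumptions, not the author's implementation:

- The 16-bit to 8-bit conversion is assumed to be min-max scaling.
- A simple nearest-neighbour resize stands in for a library resize. The original may use bilinear or bicubic interpolation.
- The ONNX model is assumed to have a single input, with the depth map as its first output.

The helper names `to_uint8`, `resize_nearest`, `preprocess`, and `infer` are hypothetical. The ImageNet mean and standard deviation values are the standard ones.

```python
import numpy as np

# Standard ImageNet statistics for per-channel normalization (RGB order).
IMAGENET_MEAN = np.array([0.485, 0.456, 0.406], dtype=np.float32)
IMAGENET_STD = np.array([0.229, 0.224, 0.225], dtype=np.float32)
INPUT_SIZE = 518  # input size expected by the ONNX Depth Anything v2 model


def to_uint8(gray16: np.ndarray) -> np.ndarray:
    """Rescale a 16-bit grayscale image to 0..255 (assumption: min-max scaling)."""
    g = gray16.astype(np.float32)
    lo, hi = float(g.min()), float(g.max())
    if hi <= lo:  # constant image: avoid division by zero
        return np.zeros(g.shape, dtype=np.uint8)
    return np.round((g - lo) / (hi - lo) * 255.0).astype(np.uint8)


def resize_nearest(img: np.ndarray, size: int) -> np.ndarray:
    """Nearest-neighbour resize to size x size (stand-in for a library resize)."""
    h, w = img.shape[:2]
    rows = np.arange(size) * h // size
    cols = np.arange(size) * w // size
    return img[rows[:, None], cols[None, :]]


def preprocess(gray16: np.ndarray) -> np.ndarray:
    """16-bit grayscale -> normalized float32 tensor of shape (1, 3, 518, 518)."""
    gray8 = resize_nearest(to_uint8(gray16), INPUT_SIZE)
    # Copy the single channel into R, G and B, then scale to [0, 1].
    rgb = np.repeat(gray8[:, :, None], 3, axis=2).astype(np.float32) / 255.0
    normalized = (rgb - IMAGENET_MEAN) / IMAGENET_STD
    # HWC -> CHW, then add a batch dimension (NCHW layout).
    return np.ascontiguousarray(normalized.transpose(2, 0, 1)[None])


def infer(onnx_path: str, tensor: np.ndarray) -> np.ndarray:
    """Run one ONNX Runtime inference; assumes one input and the depth map as output 0."""
    import onnxruntime as ort  # optional dependency, imported only when inference runs
    session = ort.InferenceSession(onnx_path, providers=["CPUExecutionProvider"])
    input_name = session.get_inputs()[0].name
    outputs = session.run(None, {input_name: tensor})
    return np.squeeze(outputs[0])  # e.g. (1, 518, 518) -> (518, 518)
```

A call might look like `depth = infer("depth_anything_v2.onnx", preprocess(frame16))`, where the file name and `frame16` are hypothetical. In a real-time loop, it is better to create the `InferenceSession` once and reuse it for every frame, rather than building it per call as this sketch does for brevity.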

For visualization, the algorithm applies min-max normalization to scale the depth values to the 0 to 255 range used for display. A Jet colormap then converts the grayscale depth map into a color image, in which warmer colors indicate closer surfaces and cooler colors indicate farther ones. To aid interpretation, a depth scale bar is generated and overlaid on the image, labeled with reference values from the minimum to the maximum depth in meters. Real-time UI updates go through the Dispatcher, which marshals them onto the UI thread so that the graphical application renders smoothly. Together, these steps provide accurate depth estimates and a readable visualization for applications such as robotics, AR/VR, 3D reconstruction, and autonomous navigation, where precise depth perception is essential.
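The visualization steps above can be sketched in NumPy. The `jet` function below is a common piecewise-linear approximation of the Jet colormap, not OpenCV's exact lookup table. With OpenCV, one would instead call `cv2.applyColorMap(gray8, cv2.COLORMAP_JET)`, which returns BGR rather than RGB.

Whether red means "near" depends on the model output:

- Relative Depth Anything models typically output inverse depth, where larger values are closer, so near surfaces come out red.
- A metric model that outputs distances would need the 8-bit map inverted (for example `255 - gray8`) to keep warm colors for near surfaces.

The tick values are in the model's output units, which are meters only if the model is metric. Drawing the text labels is left to the UI layer. The function names and the synthetic depth map are hypothetical.

```python
import numpy as np


def minmax_to_uint8(depth: np.ndarray):
    """Scale depth values to 0..255; also return the original min and max."""
    d = depth.astype(np.float32)
    lo, hi = float(d.min()), float(d.max())
    if hi <= lo:  # flat map: avoid division by zero
        return np.zeros(d.shape, dtype=np.uint8), lo, hi
    return np.round((d - lo) / (hi - lo) * 255.0).astype(np.uint8), lo, hi


def jet(gray8: np.ndarray) -> np.ndarray:
    """Piecewise-linear Jet approximation: 0 -> dark blue, 255 -> dark red (RGB uint8)."""
    x = gray8.astype(np.float32) / 255.0
    r = np.clip(1.5 - np.abs(4.0 * x - 3.0), 0.0, 1.0)
    g = np.clip(1.5 - np.abs(4.0 * x - 2.0), 0.0, 1.0)
    b = np.clip(1.5 - np.abs(4.0 * x - 1.0), 0.0, 1.0)
    return np.round(np.stack([r, g, b], axis=-1) * 255.0).astype(np.uint8)


def overlay_scale_bar(colored: np.ndarray, lo: float, hi: float,
                      width: int = 20, n_ticks: int = 5):
    """Paint a vertical Jet bar on the right edge; return the image and (row, value) ticks."""
    h = colored.shape[0]
    ramp = np.round(np.linspace(255, 0, h)).astype(np.uint8)  # top row = max value
    bar = jet(np.repeat(ramp[:, None], width, axis=1))
    out = colored.copy()
    out[:, -width:] = bar
    rows = np.round(np.linspace(0, h - 1, n_ticks)).astype(int)
    values = np.linspace(hi, lo, n_ticks)  # labels run from max (top) to min (bottom)
    return out, list(zip(rows.tolist(), values.tolist()))


# Usage with a synthetic depth map (values assumed to be in meters).
depth = np.linspace(0.5, 4.0, 120 * 160, dtype=np.float32).reshape(120, 160)
gray8, d_min, d_max = minmax_to_uint8(depth)
colored, ticks = overlay_scale_bar(jet(gray8), d_min, d_max)
```

Returning the tick rows and values, instead of drawing text, keeps the sketch free of UI dependencies. The caller can render the labels with `cv2.putText` or, in a WPF application, with text elements updated through the Dispatcher.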

- Date: January 25, 2020

- Categories: AI & Machine Learning

- Author: wannakorn sangthongngam

- Tags: Depth Estimation